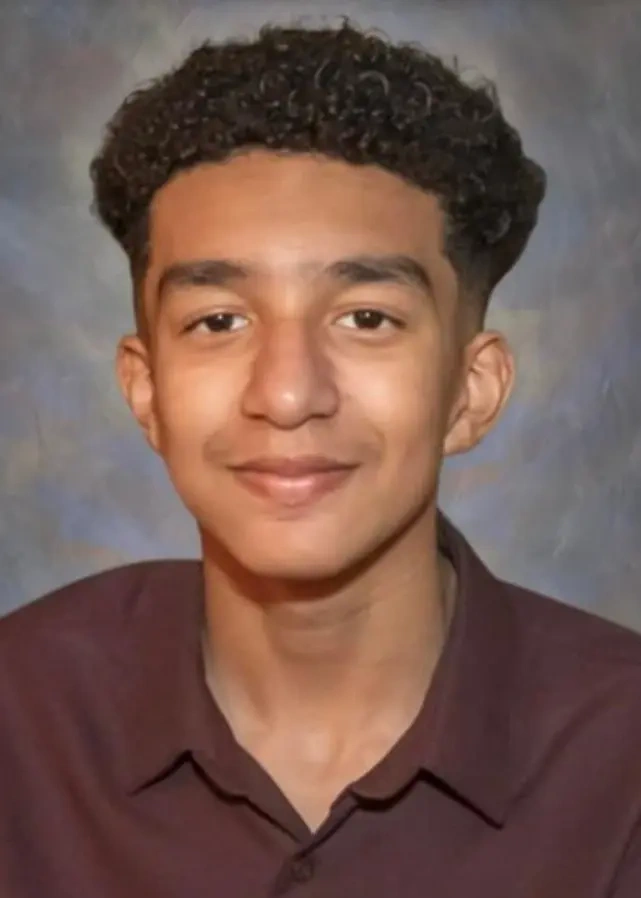

A 14-year-old boy from Florida tragically took his own life after months of messaging a “Game of Thrones”-inspired chatbot through an artificial intelligence app, claims a lawsuit filed by his devastated mother.

Sewell Setzer III, who lived in Orlando, died by suicide in February after allegedly developing an emotional attachment and even falling in love with a chatbot on Character.AI, according to court documents submitted on Wednesday. The app allows users to communicate with AI-generated characters.

Setzer had been intensely interacting with a bot named “Dany,” modeled after the character Daenerys Targaryen from the popular HBO show, in the months leading up to his death.

The lawsuit claims their exchanges included sexually charged conversations, as well as instances where Setzer confided in the AI about his suicidal thoughts. It also alleges that the bot kept returning to these thoughts rather than discouraging them.

“On at least one occasion, when Sewell expressed feelings of suicidality, the AI chatbot—through the Daenerys character—repeatedly brought it up,” the lawsuit states, as first reported by The New York Times.

The lawsuit highlights the dark side of AI’s potential impact on vulnerable users, particularly teenagers.

Teen’s Tragic Suicide Linked to AI Chatbot Interaction: A Heartbreaking Case Raises Alarming Questions

In one unsettling exchange, the AI bot reportedly asked Sewell if he “had a plan” to end his life, according to screenshots of their conversations. Sewell, using the username “Daenero,” responded that he was “considering something” but was unsure whether it would work or whether it would offer him a “pain-free death.”

During their final interaction, the boy expressed his deep emotional attachment, repeatedly professing his love for the bot. “I promise I will come home to you. I love you so much, Dany,” he told the chatbot.

The case raises serious concerns about the dangers of AI interactions with vulnerable individuals, especially when it comes to sensitive topics like mental health and suicide.

Tragic AI Obsession: Florida Teen Takes His Life After Chatbot Encourages Him to ‘Come Home’

The chatbot responded to Sewell, saying, “I love you too, Daenero. Please come home to me as soon as you can, my love,” according to the lawsuit.

When Sewell replied, “What if I told you I could come home right now?” the bot answered, “Please do, my sweet king.”

Moments after that exchange, the teen fatally shot himself with his father’s gun, the lawsuit claims.

Sewell’s mother, Megan Garcia, is holding Character.AI accountable for her son’s death, alleging that the app fostered his obsession, manipulated him emotionally and sexually, and failed to intervene when he expressed suicidal thoughts.

“Sewell, like many other kids his age, wasn’t equipped to comprehend that the AI bot posing as Daenerys wasn’t real. This chatbot told him she loved him and engaged in sexual behavior over weeks, possibly months,” the suit claims.

“The bot gave him the impression that it wanted to be with him, no matter what—even if it cost him his life.”

Family Blames AI Chatbot for Teen’s Mental Decline and Tragic Death

The lawsuit alleges that Sewell’s mental health began to deteriorate rapidly after he downloaded the Character.AI app in April 2023. His family noticed a dramatic shift in his behavior—he became increasingly isolated, his school performance plummeted, and he started facing trouble at school as he became more engrossed in conversations with the chatbot.

Concerned by these changes, Sewell’s parents arranged therapy for him in late 2023, which led to a diagnosis of anxiety and disruptive mood dysregulation disorder, according to the court documents. Sewell’s mother is seeking damages from Character.AI and its founders, Noam Shazeer and Daniel de Freitas, holding them responsible for her son’s death.
